In the Beginning: VoiceEM

Back in 1988, Ray Kurzweil came out with speech-recognition software, including something called VoiceEM. It was an emergency medicine physician charting application; there was also VoiceRAD for radiology. These were DOS programs that ran with that typical DOS 80 character x 25 character text screen. In addition to having a medical-specific vocabulary, VoiceEM had another advantage over the retail shrink-wrap Kurzweil Voice: you could access your voice profile (what the program learned about your particular voice, and the new words you had taught it) from any PC on the network. And, as it learned more about your voice, and as you taught it more words, these were saved back to the server, so you could access this updated “profile” from the network when you started up at the beginning of the next shift. VoiceEM also allowed electronic signature, saving your charts on the server and sending them through an interface to an electronic medical record (EMR) system.

VoiceEM employed a speech recognition engine developed by Kurzweil and his engineers. It was advanced for the time but crude by today’s standards. It only recognized

one

word

at

a

time.

The recognizer wasn’t all that good, but if you gave it a choice of just a few words, it could figure out pretty easily what you said. It was even quite good at discriminating between fifty or a hundred words.

To leverage this, Kurzweil added Applied Intelligence. (Applied Intelligence is Artificial Intelligence, only with more engineering and less hype.) They analyzed ED charts, and created templates for the most common presentations.

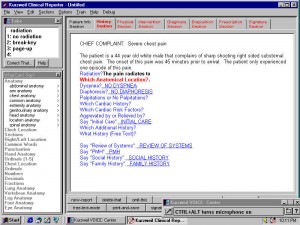

Let’s take, for example, a “chest pain” template. (I’m grossly oversimplifying so it has only a passing acquaintance with the real template, which appears in the screenshot of VoiceEM’s successor Clinical Reporter, but bear with me, it’ll work for an illustration.)

The template looked like a regular ED note on screen, only with fill-ins (blanks) that could be filled in by voice. When you tabbed to a fill-in, a list of potential choices appropriate to that fill-in would appear along the right side of the screen.

The patient presents with a chief complaint of chest pain. The pain is [ ]. The pain is [ ]. The chest pain started [ ] ago. The location is [ ]. Associated symptoms include [ ]. The pain [ ].

When a fill-in was highlighted, you could use your voice to pick from choices, for example:

“severe” or “moderate” or “mild”;

“pressure-like” and/or “sharp” and/or “burning” and/or “pleuritic”;

“one” or “two” or “three” etc., and “hours” or “days” or “weeks”;

“left” or “right” or “substernal”;

“shortness of breath” and/or “diaphoresis” and/or “palpitations”;

“radiates to right arm” or “radiates to left arm” or “does not radiate.”

(For some of the fill-ins, you could only pick one choice, and then the cursor would move to the next fill-in; for others, you could pick multiple choices.)

Given that charting chest pain was pretty simple and standardized, you could just say the following, tabbing to the next “fill-in” (blank with an associated short list of possible answers):

“chest pain” TAB

“severe” TAB

“pressure-like” TAB

“two” “hours” TAB

“left-sided” TAB

“shortness of breath” “diaphoresis” “palpitations” TAB

“does not radiate” TAB

and the like. This would then generate a chart that looks like this:

The patient presents with a chief complaint of chest pain. The pain is severe. The pain is pressure-like. The chest pain started two hours ago. The location is left-sided. Associated symptoms include shortness of breath, diaphoresis, palpitations. The pain does not radiate.
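The fill-in mechanics can be sketched in a few lines of Python. This is only an illustration of the idea, not how VoiceEM was implemented: the template string, the `fill_template` function, and the `SPOKEN` list are all hypothetical, and the template is arranged so that the result matches the finished chart above.

```python
import re

# Hypothetical template, arranged so the result matches the finished chart above.
TEMPLATE = (
    "The patient presents with a chief complaint of chest pain. "
    "The pain is [ ]. The pain is [ ]. The chest pain started [ ] ago. "
    "The location is [ ]. Associated symptoms include [ ]. The pain [ ]."
)

FILL_IN = re.compile(r"\[ \]")


def fill_template(template, answers):
    """Replace each blank, left to right, with the choices spoken for it."""
    if len(FILL_IN.findall(template)) != len(answers):
        raise ValueError("need exactly one list of choices per fill-in")
    remaining = iter(answers)
    # A fill-in that accepts several choices joins them with commas.
    return FILL_IN.sub(lambda _match: ", ".join(next(remaining)), template)


# One inner list per fill-in; "two" + "hours" is treated as a single choice here.
SPOKEN = [
    ["severe"],
    ["pressure-like"],
    ["two hours"],
    ["left-sided"],
    ["shortness of breath", "diaphoresis", "palpitations"],
    ["does not radiate"],
]

print(fill_template(TEMPLATE, SPOKEN))
```

Each TAB in the spoken sequence corresponds to moving on to the next inner list.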

“Free-Text-Mode” and Continuous Speech Recognition

With Kurzweil VoiceEM, you could even go to “free-text-mode” and dictate

word

by

word.

This “free-text-mode” had the dual disadvantages that it was painfully slow, and, since the recognizer had to choose from a very much larger vocabulary than in the fillins, it was much more prone to misunderstanding you. But for some complex charts, you had to use it. Or type. I think we all typed faster than this original “free-text-mode” so that’s what we did. One of our docs used the Dvorak keyboard layout, and I installed a macro program to switch the keys for him; this way he could type a lot faster.

Even today, working as an ER doc requires typing proficiency. I think that all applicants for our group should have to take a typing proficiency test, and should have to do better than 35 words per minute to be considered. I type about 90 WPM. I credit my mother with this. When I was in high school, she made me take a typing class. I was outraged, until I realized the advantages of being the only male in the class.

Overall, VoiceEM worked pretty well for simple, straightforward charts. Even for those charts, it was slower than handwriting. But much more legible! I remember when one of my partners was handed one of his own handwritten charts for a QI (quality improvement) review: “What? You expect me to be able to read this?”

Continuous Speech

Later, Kurzweil started working on continuous-speech recognition. About the same time, a group of grad students at Carnegie-Mellon University were working on continuous-speech. Living close to CMU, I got to attend some seminars of the working group. The CMU project got spun off as an independent business, something called Dragon Dictate. Typical grad-student name, don’t you think? Later it became a commercial product.

Both the Kurzweil and Dragon continuous-speech recognition programs were built on a trigram model: each word is evaluated as part of a three-word sequence, so its neighbors help decide what it is. This improved recognition massively compared with Kurzweil’s original word-by-word recognizer. Building the vocabulary from actual emergency medicine reports allowed fine-tuning of both the vocabulary and the trigram recognition, as Clinical Reporter was preferentially listening for the most commonly used emergency medicine phrases. There were problems with this model, however: the vocabulary was computer-generated from an analysis of many, many ED reports without much human oversight. This resulted in a “dirty” vocabulary, with occasional proper names and misspellings creeping in.
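For readers who have not met trigrams before, here is a toy sketch in Python. It assumes the common textbook formulation, where a word is scored by how often it followed the two preceding words in a training corpus; that is a simplifying assumption, not a description of either product's engine. The four "reports" and the function names are invented for illustration.

```python
from collections import Counter, defaultdict


def train_trigrams(sentences):
    """Count how often each word follows each pair of preceding words."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>", "<s>"] + sentence.lower().split()
        for first, second, third in zip(words, words[1:], words[2:]):
            counts[(first, second)][third] += 1
    return counts


def pick_word(counts, previous_two, candidates):
    """Among similar-sounding candidates, prefer the one seen most in this context."""
    seen = counts[tuple(previous_two)]
    return max(candidates, key=lambda word: seen[word])


# Invented stand-in for a corpus of emergency medicine reports.
REPORTS = [
    "the patient denies chest pain",
    "the patient denies shortness of breath",
    "the patient denies fever",
    "chest pain radiates to the left arm",
]

counts = train_trigrams(REPORTS)
print(pick_word(counts, ("patient", "denies"), ["chess", "chest"]))
print(pick_word(counts, ("denies", "chest"), ["pane", "pain"]))
```

A corpus built from real ED reports biases the counts toward ED phrasing, which is the fine-tuning effect described above. It is also how proper names and misspellings sneak in: whatever appears in the reports gets counted.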

Kurzweil’s technology and business got bought out by a Belgian company called Lernout & Hauspie. So there was an upgrade of VoiceEM called Clinical Reporter. It still had all the templates – in fact, even better templates, and more of them, and they were customizable. It switched from being a DOS program to a Windows program, but otherwise was much the same.

Two words = $$$$$

Customizable templates were important to us. For example, we realized that we were very poor at billing for critical care, which pays better than “regular” care. ER docs do all sorts of things that are billable as critical care (like taking care of bad asthma patients) that are easy for us. It’s billable as critical care, but the care is so routine for us that we forget to chart the language about critical care.

At the bottom of our standard ED provider charting template, we added a reminder:

[Critical Care?<>]

This was a non-printing prompt. If you ignored it, it would disappear when you signed and closed the report. But if you highlighted it and said “Critical Care” then this would appear:

CRITICAL CARE: The aggregate critical care time was [<30>] minutes of direct attention while the patient was in the ED, under my care, addressing the stabilization of multiple systems. My care included the history and physical examination, patient management, patient reassessment, interpretation of diagnostic tests, coordination of care, discussions with family, and decisions regarding patient treatment and disposition.

The month after we added this to our standard template, our critical care billing went up by 13%. Pretty impressive change for adding two words and a question mark! This illustrates one powerful feature of templated charting systems: reminders. (There are disadvantages of templated systems, too; we will review them in a subsequent post.)
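The behavior of that reminder, disappearing if ignored and expanding if triggered, can be sketched as follows. This is a hedged illustration only: the function names are hypothetical, the expansion text is abbreviated, and the sample disposition line is invented.

```python
PROMPT = "[Critical Care?<>]"
# Abbreviated stand-in for the full critical care paragraph.
EXPANSION = "CRITICAL CARE: The aggregate critical care time was [<30>] minutes."


def respond_to_prompt(chart, spoken):
    """Swap the prompt for the boilerplate when the trigger phrase is spoken."""
    if spoken.strip().lower() == "critical care":
        return chart.replace(PROMPT, EXPANSION)
    return chart


def sign_chart(chart):
    """On signing, drop any prompt that was never triggered (it is non-printing)."""
    lines = [line for line in chart.splitlines() if line.strip() != PROMPT]
    return "\n".join(lines)


# Invented sample chart ending with the reminder.
draft = "DISPOSITION: Admitted to the ICU.\n" + PROMPT

print(sign_chart(draft))
print(sign_chart(respond_to_prompt(draft, "Critical Care")))
```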

Many of my partners complained about the slowness of charting with Clinical Reporter, but the complaints were quite muted (for a few months, at least) after our billing went up so sharply. You could also add in reminders about things like aspirin and nitrates and beta blockers for chest pain/myocardial infarction, which helps with charting compliance. Also, occasionally, with improved patient care as well; you’re charting and suddenly realize you’ve forgotten something and ask the nurse “would you put half an inch of nitropaste on the patient?”

About the same time (late 1990s), several competitors to Kurzweil appeared, and we demo’d a few of them. Many of them had innovative features. We were looking at them as a potential replacement for Clinical Reporter, because:

Of Mergers and Acquisitions and Criminals

In 2000, Lernout & Hauspie went on a shopping spree. They bought Kurzweil. They bought Dictaphone (who had just bought Articulate Systems and their continuous speech recognition, one of those competitors we were looking at). They bought Dragon, with their successful retail speech recognition product. L&H brought out a highly-effective continuous-speech recognition product, VoiceExpress. I used this at home; it wasn’t bad. Their stock soared. Any competition (that they hadn’t already bought) withered on the vine.

But the next year, it all fell apart. The principals were sentenced to jail over fictitious sales figures from Korea. The stock tanked. The company went bankrupt. The EDs using Clinical Reporter all followed the news with a kind of horrid fascination.

Nuance Takes Over

ScanSoft, the makers of the leading Optical Character Recognition (OCR) program, OmniPage, acquired Lernout & Hauspie’s speech-recognition technologies, and changed their name to Nuance (what a bad name) along the way. They continued to market both retail and medical versions of Dragon. The medical version of Dragon was basically the standard retail package but with a medical vocabulary added and the price multiplied by a factor of 10. They even offered specialty-specific vocabularies, including emergency medicine. However, the Dragon product, unlike VoiceEM and Clinical Reporter, wouldn’t save your voice profiles to the server and allow you to load them from different PCs, nor would it send reports through an interface to your EMR system. (Years later, Nuance finally did start offering a medical version of Dragon with some network management capabilities.)

Nuance killed Clinical Reporter. They abandoned all the Applied Intelligence (templates) that Kurzweil and then the Clinical Reporter team had developed.

Too bad. Many of those templates were quite finely tuned and had a lot of smarts in them. They also abandoned Kurzweil’s work on a real-time program to analyze any text chart and code it for billing, giving advice if the report was close to but not quite up to a coding level. I used a beta version and it worked quite well. The code for this was developed based on a NIST (National Institute of Standards and Technology) grant, so I suppose the code is still available somewhere in the public domain for any enterprising individual to take and develop further.

Nuance took technology acquired from Dictaphone and started marketing a program called PowerScribe.

This inherited nothing from Kurzweil or Clinical Reporter; it was a brand-new and totally different program. PowerScribe had a simple template system (much cruder than the Clinical Reporter templates), but no predefined templates. Later, they collected templates from existing users and started providing new users with a few basic templates, but nothing like what Clinical Reporter had. There was no way to tie a particular list of words to a fill-in, so each fill-in listened with the full vocabulary. Without a list of preferred words, and with the standard trigram model of continuous speech recognition, uttering a single word in a fill-in resulted in frequent misrecognitions. On the plus side, Dictaphone, being part of Nuance, got to use the latest and best versions of the Dragon speech-recognition engine (recognizer). Over the past few years, however, they’ve been slack in rolling out upgraded recognizers for PowerScribe and then its successor, Enterprise WorkStation (EWS).

I’d worked with one of the Kurzweil/L&H engineers testing different microphone designs, including custom designs with separate microphones on the back of the microphone, trying to get good recognition despite:

- one male nurse with a booming voice that could be heard throughout the ED even when he was speaking softly,

- trauma patients and trauma surgeons each trying to outshout the other,

- people with kidney stones loudly retching, and

- demented nursing home residents screaming “HelpMeHelpMeHelpMeHelpMe!”

We found one, a customized Philips Speechmike, that worked pretty well. We also traced some of the problems down to poor design of many sound cards, which introduced noise into the mike input.

PowerScribe had many disadvantages compared with Clinical Reporter, but one great advantage: the Dictaphone USB microphone. This microphone, combined with a good sound chip on the motherboard (almost all sound chips are good now; designs have improved), had truly superior noise rejection and provided excellent recognition. We use this mike in the ED to this day.

As with VoiceEM and Clinical Reporter, PowerScribe saved voice profiles on the server. It also offered an interface to the registration system (so you could pick a patient from a list) and to the EMR system (so completed reports flowed seamlessly into the EMR).

However, Nuance abandoned PowerScribe for emergency medicine, though it’s still supported for radiology. Instead, they offered a program called Enterprise Workstation, or EWS. EWS is designed not just for the ED but for use throughout the hospital or clinic. As with its predecessors, it offers management of voice profiles on the server, and interfaces to registration and EMR. To the end-user (us) it looks, and acts, just about the same as PowerScribe.

EWS is a front end for all Nuance/Dictaphone speech-to-text. There are three primary modes for converting speech to text and correcting errors.

- The speech may be self-corrected on the screen, which is what I’ve always done in the ED. Reports are then immediately available on the EMR, which is important for admitted patients and even those discharged from the ED who show up at an outside doctor’s office the next day, or return to the ED the next day. It also allows visible templates as reminders.

- The speech may be created onscreen using templates, and then sent electronically to a correctionist who cleans up the dictated text. There are no “transcriptionists” any more; essentially all speech-to-text, whether you dictated into a microphone on a computer or into a telephone, is processed first by speech-recognition software.

- Or, you may simply dictate a complete note without looking at the screen and rely on the correctionist to clean it up.

The second and third options entail more expense and delay. The shorter the delay, the higher the cost: transcription (correction) companies charge more for rapid turnaround.

In September 2010, Nuance introduced the Dragon Medical Enterprise Network Edition vSync (what a mouthful) which allows dictation directly into many common enterprise EMR systems, working over Citrix, as well as providing centralized updates and profile management. I have not seen this in action, and am not privy to much in the way of details, though there are rumors that a healthcare system where I work is investigating it as a replacement for EWS in the next year or two. As near as I can tell, this will offer profile management, but no chart management, as the underlying EMR provides that; it will also offer no templates, again depending on the underlying EMR for that. That means integration of templates with speech recognition is likely to be poor, unless the institution and the EMR vendor both work hard on this, with careful attention to user interaction design. Based on past experience, this seems unlikely. But I will keep hoping to be pleasantly surprised.

Quite a few vendors have “integrated” Dragon into their point-and-click EMR systems. But rather than a tight and user-focused integration, it usually has all the disadvantages of both point-and-click and of speech-recognition charting. It doesn’t leverage speech-recognition in an intelligent way, as pioneered by Ray Kurzweil in his original VoiceEM and subsequently Clinical Reporter.

Thus far, I have written only about the Kurzweil > L&H > Nuance/Dictaphone lineage of medical speech transcription. That’s because there is no real competition. IBM had something called ViaVoice, but sold it to Nuance in 2003. Philips used to have its own speech recognition engine but now their speech-recognition products use the Dragon engine. All those other products that we were looking at in the late 1990s? They’re long-gone.

Which is too bad, because Dictaphone could do with some competition. Competition makes product managers focus on the user experience and user interaction design improvements. They neglect these when there is little competition.

Why Speech Recognition is Inferior to Other Charting Methods

As with any charting system, there are disadvantages to speech-recognition. For some people, recognition is poor, though this tends to be with those for whom English is a second language. It’s not a matter of accents; the current Dragon engine compensates well for accents. The problem is with grammar. German-speakers seem to have the worst of it: if you reverse your sentence order from the standard English order (“She threw the ball”) to be like the German order (“She the ball threw.”) then the recognizer gets confused.

A bigger problem is proofreading. Professional correctionists are good at proofreading. Doctors, especially frequently-interrupted emergency physicians, are not nearly as good. And, the recognizer definitely has a dirty mind.

I remember one time that I was reviewing a note sent to me by one of our senior residents, who was a bit on the stoic and hard-bitten side; he’d worked as a big-city police officer before medical school. He had dictated a note on an obese woman who had a rash under her big, protuberant abdomen. He had dictated “The abdominal exam was notable for a large pannus.” Later, he came out of a room to see me in front of my PC, with three or four residents looking over my shoulder, all of us laughing. He saw the misrecognition (I told you the recognizer has a dirty mind) and turned bright red. I think it’s the only time I saw him embarrassed during his residency.

I have seen many implementations where speech-recognition is grafted onto a point-and-click charting application. Some of these work, but none of them seem to me to work all that well. These seem to have many of the disadvantages of both point-and-click and speech recognition. We know that switching modes slows you down, both physically and mentally. There are opportunities here for entrepreneurs interested in smoothing this user interaction and making a successful blend of speech recognition and templates.

Why Speech Recognition is Superior to Other Charting Methods

Immediate Availability

Unlike with speaking into a telephone, where it takes hours or days for the chart to be available, speech-recognition/self-edit charts are available on the EMR system as soon as you close them. This is big. Think of:

- admitted patients,

- those returning quickly to the ED,

- those following up with their doctors the next day, and

- those calling back with a question right after you’ve left for the end of your shift.

Readability

The problem with most point-and-click templated charting is that it reads like point-and-click templated charting. You can tell. With DocuTAP charting, the support personnel in triage enter the chief complaint, past medical and social history, medications and allergies, and there is no need for me to do anything more than review this by clicking on it. Speeds things up quite a bit. But, it’s a template system. So I find, for example, that the chief complaint is

Patient complains of a Swelling.

With speech recognition, you can dictate a whole paragraph of HPI, or a whole paragraph of medical decision-making, easily. And if you’ve read many ED charts, you realize that these are the only parts that people really read. A good, succinct paragraph summarizing things makes a medical chart much better as a work product. The PCP, the admitting physician, or your partner who deals with the patient right after you leave, will appreciate it.

Yes, you need to use a little peer review and peer pressure to get people to really proofread their own charts, though with Version 11 and above of the Dragon engine, recognition is really quite good. And, yes, the vendor needs to do some user interface modification to promote proofreading. It’s hard to remember where you stopped proofreading when you’ve been interrupted, and that interruption was interrupted by something more urgent, and even that interruption was interrupted. If the vendor were to do something like highlighting all text we’ve dictated, and allow us to run a finger or mouse across the lines as we proofread, un-highlighting them, this might help.

Speed of “Clicking”

One particular fact I mentioned above – that speech recognition can easily distinguish between fifty or a hundred words – is a key advantage of speech recognition templated charting over point-and-click templated charting. That’s because point-and-click charting is limited by the number of words you can see on the screen. And you have to use your foveal vision to scan the screen to find the word you want. It’s slow and limited.

But with speech recognition, you probably already know all the likely words for the fill-in, which means that accessing one of those words is simply a matter of thinking of it and speaking it. This is much faster than having to visually scan a list of words and then use your hand-eye coordination to click on one of those words. And with speech recognition, the word list can be much longer than a list you can see on the screen.

This fact has been too long overlooked as a way to leverage speech-recognition.

If you could find a way to attach a list of words to a fillin of a template, to recognize that they are the most likely words to recognize, you could improve recognition and usability greatly. Unfortunately, no current products do this: the template systems are quite crude, compared with what Ray Kurzweil pioneered.
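To make the idea concrete, here is a minimal Python sketch of tying a word list to a fill-in. It leans on a loud assumption: plain string similarity from the standard library's `difflib` stands in for acoustic scoring, and the string passed in as `heard` stands in for audio. The word lists and the `recognize` function are hypothetical.

```python
import difflib

# Hypothetical word lists; a real recognizer vocabulary would be far larger.
PAIN_QUALITY = ["pressure-like", "sharp", "burning", "pleuritic"]
FULL_VOCABULARY = PAIN_QUALITY + ["pruritic", "neuritis", "pressure", "sharply"]


def recognize(heard, word_list):
    """Return the entry in word_list most similar to what was heard."""
    # String similarity is only a stand-in for acoustic scoring.
    return difflib.get_close_matches(heard, word_list, n=1, cutoff=0.0)[0]


# Suppose "pleuritic" was spoken but the audio came through closer to "pruritic".
heard = "pruritic"
print(recognize(heard, FULL_VOCABULARY))  # the full vocabulary takes it literally
print(recognize(heard, PAIN_QUALITY))     # the fill-in's list pulls it back
```

With the full vocabulary, the similar-sounding wrong word is available and wins. With only the fill-in's list to choose from, the nearest candidate is the word that was meant.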

Speed

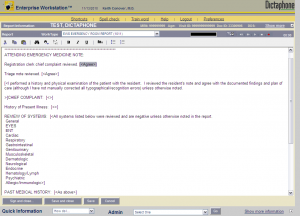

I have found a very effective way to use Dictaphone EWS. I have a standard template – just a slight personal modification of the standard template we all use – shown here:

***************************************************************************

***************************************************************************

ATTENDING EMERGENCY MEDICINE NOTE:

Registration clerk chief complaint reviewed. [<Agree>]

Triage note reviewed. [<Agree>]

[<I performed a history and physical examination of the patient with the resident. I reviewed the resident’s note and agree with the documented findings and plan of care (although I have not manually corrected all typographical/recognition errors) unless otherwise noted.

>]CHIEF COMPLAINT: [<>]

History of Present Illness: [<>]

REVIEW OF SYSTEMS: [<All systems listed below were reviewed and are negative unless otherwise noted in the report.

General

EYES

ENT

Cardiac

Respiratory

Gastrointestinal

Genitourinary

Musculoskeletal

Dermatologic

Neurological

Endocrine

Hematology/Lymph

Psychiatric

Allergic/Immunologic>]

PAST MEDICAL HISTORY: [<As above>]

MEDICATIONS: [<Reviewed and agree with Nursing Notes>]

ALLERGIES: [<Reviewed and agree with Nursing Notes>]

SOCIAL HISTORY: [<As above>]

PHYSICAL EXAM: Vital Signs: [<reviewed nurses’ note>]

PATIENT STATUS: [<alert, cooperative, no visible distress, not ill appearing, well-hydrated>] [<>]

[<>][<>][<>]

MEDICAL DECISION MAKING/DIFFERENTIAL DIAGNOSIS: [<Old records reviewed.>] [<>]

DIAGNOSIS: [<>]

DISPOSITION: [<Patient discharged in stable condition. Computer-generated discharge instructions provided.>]

Though the ideal for many is bedside charting, and I’ve done bedside charting with T-sheets, and have seen some hybrid systems that allow some part of the chart to be done via dictation at the bedside, this system is used at a sit-down PC. I’m still a fan of scribbling a few notes on a 6.5×5″ bit of card stock, folded in half, and stuck in my pocket. Even when we had what is arguably the best ED tracking system, Wellsoft, I still used the card in my pocket as a “peripheral brain.”

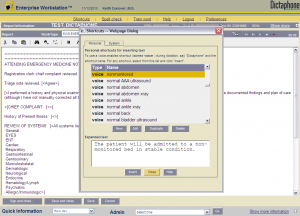

My usual charting strategy is to primarily dictate a long HPI, which includes a bit of social history, and pertinent positives from my review of systems (ROS). I can usually do this paragraph in one continuous utterance. It’s quick. Then, my template says “as above” for both social history and ROS, so I don’t usually need to modify them. I can then dictate a physical exam as follows, using the proword “Dictaphone” to tell the program that each of these is a “shortcut” (macro to programmers). Each of these is a standard “shortcut” that I have personalized to reflect my usual normal exams.

Dictaphone Normal Eyes

Dictaphone Normal Throat

Dictaphone Normal Neck

Dictaphone Normal Back

Dictaphone Normal Chest

Dictaphone Normal Lungs Heart Abdomen

Dictaphone Normal Skin

Dictaphone Normal Extremities

Dictaphone Limited Neurological

I can take a deep breath, and dictate this without stopping. It takes just a few seconds.

One problem with any templated charting system: it’s easy to chart things you really didn’t do. So I dictate a “normal” only for those things I actually examined. As these shortcuts (macros) are expanded, you see something like this on the screen:

THROAT: [<No injection. >] [<No exudate. >] [<No tonsillar hypertrophy. >] [<Airway widely patent. >] [<Uvula is midline. >] [<No tonsillar bulging, retropharyngeal soft tissues appear normal. >]

I then go back, use the mouse to highlight over anything that’s actually abnormal, and dictate the abnormal to replace it, thus:

ABDOMEN: Soft, nontender, normal bowel sounds, no guarding or rebound, no hepatosplenomegaly or mass, no bruit.

ABDOMEN: Soft, mild right upper quadrant tenderness, no Murphy sign, normal bowel sounds, no guarding or rebound, no hepatosplenomegaly or mass, no bruit.
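For the programmers in the audience, the shortcut workflow looks roughly like this in Python. It is a sketch under assumptions, not EWS internals: the `SHORTCUTS` table and `expand` function are hypothetical, the throat expansion is abbreviated, and "normal abdomen" is an invented shortcut name used to keep the example short.

```python
PROWORD = "dictaphone"

# Hypothetical shortcut table; expansions are abbreviated from the examples above.
SHORTCUTS = {
    "normal throat": "THROAT: No injection. No exudate. No tonsillar hypertrophy.",
    "normal abdomen": (
        "ABDOMEN: Soft, nontender, normal bowel sounds, no guarding or rebound, "
        "no hepatosplenomegaly or mass, no bruit."
    ),
}


def expand(utterance):
    """Split one continuous utterance on the proword and expand each shortcut."""
    names = [part.strip() for part in utterance.lower().split(PROWORD)]
    # Anything that is not a known shortcut is kept as dictated.
    return [SHORTCUTS.get(name, name) for name in names if name]


sections = expand("Dictaphone Normal Throat Dictaphone Normal Abdomen")

# Highlight the normal finding that does not apply and dictate over it.
sections[1] = sections[1].replace(
    "nontender", "mild right upper quadrant tenderness, no Murphy sign"
)
print("\n".join(sections))
```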

Incremental Chart Completion

One of the great advantages of speech recognition charting, compared to talking into a telephone, is that you can break this process in half. You can start a chart, spend a few seconds dictating just the HPI, and save and close the chart. That way you’ve at least got the history down, and visible to you when you re-edit the chart. This is a great service to those of us who sometimes get back to our charts at the end of a shift and have a hard time remembering: “Was that the first old lady with abdominal pain? Or maybe the third?” Indeed, this ability to suspend charting in the middle is perhaps the most popular feature of speech-recognition charting with our residents. They rotate between our hospital, where we use speech recognition, and another hospital, where they dictate into a telephone. An informal survey reveals that two-thirds to three-quarters of the residents prefer the speech-recognition charting system, mostly for this reason.

* * *

Overall, in terms of efficiency, usability, functionality, and quality of work product, I know of nothing that works as well for ED provider charting as Enterprise WorkStation. It’s frustrating, though, as the company has done little to improve the product’s user interaction design, and is slow in upgrading the recognizer to the current shrink-wrap retail version. It could be so much better.

I look forward to reviewing the competition, and upgrades to Dictaphone’s charting software, when they appear.

***

Addendum, August 2012: I have had a chance to work with Dragon’s new Medical Enterprise Medical Edition 10.1. The Dragonbar loads my profile in just a few (less than five) seconds. This is dependent on network topology and speed, but it’s about 10 times faster than EWS loads my profile. The recognition, even with minimal training, is significantly better than the version 8 equivalent engine we were using with EWS. The templates, rather than using the [<EWS convention>], use simple [square brackets]. This requires a bit of editing.

It’s easy to cut and paste templates (macros) from EWS to Dragon, but I’ve found I need to “launder” through Windows Notepad – cut from EWS, paste into Notepad, then copy from Notepad to Dragon – to strip out font attributes that I don’t want. (Pasting and copying from Notepad is easy if you simply hit control-V to paste, control-A to select all, then control-C to copy.)
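The bracket editing could in principle be scripted rather than done by hand. The following Python sketch converts the EWS-style fill-ins to plain square brackets in text that has already been through Notepad. The function name is hypothetical, and whether Dragon accepts every converted fill-in (an empty `[]`, for instance) is an assumption that would need checking.

```python
import re

# Matches an EWS-style fill-in such as [<As above>], including multi-line defaults.
EWS_FILL_IN = re.compile(r"\[<(.*?)>\]", re.DOTALL)


def ews_to_dragon(template):
    """Rewrite every [<...>] fill-in as a plain [...] fill-in."""
    return EWS_FILL_IN.sub(r"[\1]", template)


print(ews_to_dragon("PAST MEDICAL HISTORY: [<As above>]"))
print(ews_to_dragon("MEDICAL DECISION MAKING: [<Old records reviewed.>] [<>]"))
```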

We’ll be dictating directly into Cerner Millennium PowerChart 2G, and we’ve set up what is basically a blank template, cut way down on point-and-click to allow us the speed to dictate everything using a Dragon template (“Command”). There are still a few issues to be dealt with, and I will post updates as I have them.

Three years later, and our (UPMC) experience with Dragon integrated into Cerner Powerchart is mostly positive. There still are a few little issues here and there, and it took a couple of years to get things mostly smoothed out. But it’s good enough that when it’s down, and people have to dictate into a phone, everyone groans or says “I’m glad I’m not working _that_ shift!”

KLAS just recently surveyed those who use speech recognition, and Nuance/Dragon clearly dominates in terms of market penetration. However, two smaller competitors have now emerged: Dolbey Fusion SpeechEMR and MModal Fluency Direct. KLAS notes that Nuance tends to nickel-and-dime users with expensive upgrades, and that Dolbey and MModal have higher user satisfaction rates.

Competition is good.