Data mining has been a topic of interest to businesses and researchers for many decades. For physicians and other clinicians, and those designing systems for clinicians, data mining has been of less interest. Yes, you can use data mining to predict the volume of patients in your ED by day and hour. Yes, you can use data mining to order supplies more intelligently. But to improve patient care? Not so much.

Yes, research using data mining can provide us with some clinical information, but such retrospective studies, especially subgroup analysis, can lead to egregious error, as summarized in a recent article in JAMA. It’s not a replacement for prospective, blinded and randomized studies.

For decades, designers of clinical information systems have been trying to get physicians and other clinicians to use structured data entry. Point-and-click, or in the iPad/Surface era, touch-and-swipe, is a great way to produce structured data. Computer programmers love structured data. Researchers love structured data. Business managers love structured data. The main problems are that (1) physicians and other clinicians are remarkably expensive data entry clerks, and (2) the resulting medical records are of little clinical utility. If you’re trying to care for a patient, or defending a malpractice suit, and looking at a medical record, you’d rather have a single page of concise handwritten notes than 400 pages of structured data. And, especially given the structured methods of nurse charting these days, 400 pages of structured data is what you get. As noted in a previous post here, the signal-to-noise ratio is very low. Quantity, not quality.

There are potential advantages to structured data entry for medical encounters. If you try to write a prescription for a medication to which the patient is allergic, a screen will pop up and slap you in the face. Metaphorically speaking. This does prevent medical error. But there may be downsides, including alarm fatigue – when the computer keeps alerting you to potential problems, but it’s often wrong. The computer is, in Aesop’s terms, crying wolf all the time.

When trying to use an app – I guess I have to say “app” instead of “application,” as we are in a democratic society, and the people have voted overwhelmingly for the shorter term – when trying to use an app for structured charting, given the limited choices, it’s like fitting square pegs into round holes. For example, I just saw a patient who is “allergic” to Augmentin. It gives her vomiting, even if she takes it with food. This had been noted in the electronic medical record (EMR) on previous visits. She can take amoxicillin and penicillin without problems. This had also been noted in the EMR on previous visits. So why is the EMR so stupid that it always pops up and slaps me in the face with a “NO, YOU CAN’T PRESCRIBE PEN-V-K TO THIS PATIENT YOU IDIOT!!!!”? A good example of trying to fit the square peg of a drug sensitivity into an EMR round hole that can only deal appropriately with true allergy. Another example that happened a few minutes later: a woman who was “allergic” to NSAIDs, but it was simply that she had a mild case of von Willebrand disease and needed to take them sparingly due to the possibility of bleeding. This was a good example of how you can – and in my opinion, should – anthropomorphize medical computer apps. Apps that are rude, crude, or stupid need to be reeducated (reprogrammed).
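To make the square-peg problem concrete, here is a minimal sketch of what a less rude data structure might look like: a reaction record that distinguishes true allergy from intolerance or caution, and that remembers which related drugs the patient is known to tolerate. Everything here is hypothetical (the `ReactionType` categories, the `tolerated` field, the toy `DRUG_CLASS` table, and the `alert_level` function); it is not how any particular EMR works, and it is certainly not a clinical reference.

```python
from dataclasses import dataclass, field
from enum import Enum


class ReactionType(Enum):
    ALLERGY = "allergy"          # true allergy: related drugs matter too
    INTOLERANCE = "intolerance"  # side effect, such as vomiting
    CAUTION = "caution"          # usable sparingly, e.g. bleeding risk


@dataclass
class AdverseReaction:
    drug: str
    reaction_type: ReactionType
    note: str = ""
    # Hypothetical field: lowercase names of related drugs the chart
    # documents the patient as taking without problems.
    tolerated: set[str] = field(default_factory=set)


# Toy drug-class table, for illustration only.
DRUG_CLASS = {
    "augmentin": "penicillins",
    "amoxicillin": "penicillins",
    "pen-v-k": "penicillins",
    "ibuprofen": "nsaids",
    "naproxen": "nsaids",
}


def alert_level(prescribed: str, reactions: list[AdverseReaction]) -> str:
    """Return 'block', 'warn', or 'none' for a proposed prescription."""
    drug = prescribed.lower()
    level = "none"
    for r in reactions:
        if drug in r.tolerated:
            continue  # documented as tolerated: stay quiet
        recorded = r.drug.lower()
        same_drug = recorded == drug
        recorded_class = DRUG_CLASS.get(recorded)
        same_class = recorded_class is not None and recorded_class == DRUG_CLASS.get(drug)
        if r.reaction_type is ReactionType.ALLERGY and (same_drug or same_class):
            return "block"  # true allergy to the drug or its class
        if same_drug or (same_class and r.reaction_type is ReactionType.CAUTION):
            level = "warn"  # worth a polite reminder, not a slap
    return level


augmentin = AdverseReaction(
    "Augmentin", ReactionType.INTOLERANCE, "vomiting",
    tolerated={"amoxicillin", "pen-v-k"},
)
nsaids = AdverseReaction("ibuprofen", ReactionType.CAUTION, "mild von Willebrand disease")

print(alert_level("Pen-V-K", [augmentin, nsaids]))    # no alert for a tolerated drug
print(alert_level("Augmentin", [augmentin, nsaids]))  # a warning, not a block
```

The design choice is simply that the alert follows from the recorded reaction type and the documented tolerances, rather than treating every entry in the allergy list as a true allergy to the whole drug class.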

So structured data gathering has both pluses and minuses when the data are more complex than the data structure can capture. What about unstructured data?

I’ve dictated my ED charts to a computer for decades, and now that the technology has finally improved enough, I can’t imagine doing them any other way. With the improvements in speech recognition over the years, clinicians are rapidly abandoning structured charting apps. (Or trying to; their administrators are resisting.) So we might as well resign ourselves, at least for physicians’ and other clinicians’ charts, to having to deal with unstructured, speech-recognized text.

Or is it truly “unstructured”?

It’s not structured based on clicks on little radio buttons, or text laboriously typed into little boxes on the screen. But there certainly is structure in medical reports. Whether dictated, handwritten or typed, a medical/surgical history and physical usually has structure: an HPI, ROS, PMH, Physical Exam, and either Assessment and Plan or Medical Decision-Making.
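As a rough illustration of how much structure is already sitting in a dictated note, here is a small sketch that splits a note into its sections by looking for the headings listed above. It assumes each heading starts a line and is followed by a colon, which real dictations won’t always honor; the `split_sections` function and the sample note are made up for the example.

```python
import re

# Section headings named in the text. Real notes vary, so treat this
# list, and the "Heading:" line format, as assumptions.
HEADERS = [
    "HPI",
    "ROS",
    "PMH",
    "Physical Exam",
    "Assessment and Plan",
    "Medical Decision-Making",
]

HEADER_RE = re.compile(
    r"^[ \t]*(" + "|".join(re.escape(h) for h in HEADERS) + r")[ \t]*:[ \t]*",
    re.IGNORECASE | re.MULTILINE,
)


def split_sections(note: str) -> dict[str, str]:
    """Map each recognized heading to the free text that follows it."""
    sections = {}
    matches = list(HEADER_RE.finditer(note))
    for i, m in enumerate(matches):
        # A section runs until the next heading, or to the end of the note.
        end = matches[i + 1].start() if i + 1 < len(matches) else len(note)
        # Normalize the heading to its canonical spelling.
        name = next(h for h in HEADERS if h.lower() == m.group(1).lower())
        sections[name] = note[m.end():end].strip()
    return sections


note = """HPI: 17-year-old with new-onset psychosis and a rash.
ROS: Negative except as noted.
Physical Exam: Petechial rash on the lower legs.
Assessment and Plan: Check CBC.
"""

for heading, text in split_sections(note).items():
    print(f"{heading} -> {text}")
```

Once a note is cut into sections like this, whatever does the “reading” at least knows whether a phrase came from the history or from the exam.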

Can we take advantage of this structure, and the grammatical structures normally found in language, to analyze and make sense of it? As Bob the Builder says, “Yes we can!” It’s called “reading.”

Sorry for the trick question. The question should really be “can our computers take advantage of this structure?” and the answer again is “yes we can,” although perhaps not with the exclamation point. Can we teach our computers to read? To a limited degree, the answer is yes. It’s called text mining, also known as text analytics. You take unstructured text – also known as free text – and apply natural language processing (NLP). Having an expected format such as an HPI, and a context such as “ED dictation,” helps with the NLP. The output is data. The better the NLP, the better the data; and NLP is getting much, much better, with application of AI techniques such as machine learning.
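To show what “the output is data” means, here is a deliberately crude sketch: a few hand-written rules that pull findings out of free text and mark each one as present or negated. It is a toy stand-in, not machine learning and not any real clinical NLP product; the `FINDINGS` vocabulary, the `NEGATION_CUES` list, and the `extract_findings` function are all invented for illustration.

```python
import re

# Hypothetical vocabulary and negation cues, chosen only for illustration.
FINDINGS = ["chest pain", "fever", "vomiting", "rash"]
NEGATION_CUES = ["no", "denies", "without", "negative for"]


def extract_findings(text: str) -> list[dict]:
    """Turn free text into rows of {'finding': ..., 'present': ...}."""
    rows = []
    # Naive sentence split on ., ! or ? followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        lowered = sentence.lower()
        for finding in FINDINGS:
            pos = lowered.find(finding)
            if pos == -1:
                continue
            # A finding counts as negated if a cue word appears
            # earlier in the same sentence.
            before = lowered[:pos]
            negated = any(
                re.search(r"\b" + re.escape(cue) + r"\b", before)
                for cue in NEGATION_CUES
            )
            rows.append({"finding": finding, "present": not negated})
    return rows


for row in extract_findings("Patient reports fever and vomiting. Denies chest pain. No rash."):
    print(row)
```

Rules like these break quickly on real dictation (“no fever, but a rash” would trip this one), which is exactly where better NLP earns its keep.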

Back maybe 15 years ago, I had a bit of experience with NLP in a clinical setting. Ray Kurzweil, one of the pioneers in speech recognition, developed an application for Emergency Department charting that evolved into something called Clinical Reporter. The Clinical Reporter team had a NIST grant to develop an add-on NLP module which we used, on an experimental basis, to code our charts. It did a pretty good job.

But now that CMS is talking about getting rid of hospital clinic visit coding, and maybe ED visit coding, by paying the same amount for all of them, maybe we don’t need NLP to help code charts.

Can NLP be helpful clinically? The answer right now is a qualified “yes” and a “not quite yet.”

Can NLP help clinics that see patients on a regular basis? There are hints that this may soon be useful, useful enough that it may help save lives. An article entitled “Predicting the Risk of Suicide by Analyzing the Text of Clinical Notes” was just published five days ago in the prestigious online journal PLOS ONE. Though clinicians routinely ask psychiatric patients about thoughts of suicide, it turns out that only about a third of suicidal patients will admit it. And identifying patients at high risk of suicide, for more intense monitoring and treatment, remains problematic for mental health professionals. By using an NLP technique called genetic programming, the authors tried to use word and phrase analysis of the electronic medical records to assess risk of suicide. This was a small, retrospective study, and showed only 67–69% accuracy; however, if that is borne out in larger studies, and if it’s prospectively validated, this might make a significant clinical difference in preventing suicide. This study was carried out in the Veterans Administration (VA) Health System, and might not be applicable to other health systems; but we won’t know until we try. Speaking of trying, there is something called the Durkheim Project, which is trying to extend this work with VA data to social networks; it’s a project of Dartmouth University and the VA. Quoting from the project website, it is named in honor of Emile Durkheim, a founding sociologist whose 1897 publication of Suicide defined early text analysis for suicide risk, and provided important theoretical explanations relating to societal disconnection.

Can NLP help for real-time clinical encounters? Not quite yet. First, in order for NLP to help you with your diagnosis and treatment plan, it needs to analyze your history and physical exam before you formulate your diagnosis and treatment plan. Right now, how many physicians and other clinicians chart their H+P before they formulate a diagnosis and treatment plan?

For this to work, we need to be able to chart, in free text, as we go along. And, in a busy ED, sometimes you just have to defer charting because something more critical has dragged you away; you want to get the patient discharged rather than having her wait another hour. But, presuming we could get NLP to work before the patient was discharged, perhaps the computer could ask you “did you consider Lower Slobbovian Hemorrhagic Fever?”

Perhaps this is the wrong role for NLP in the acute medical setting.

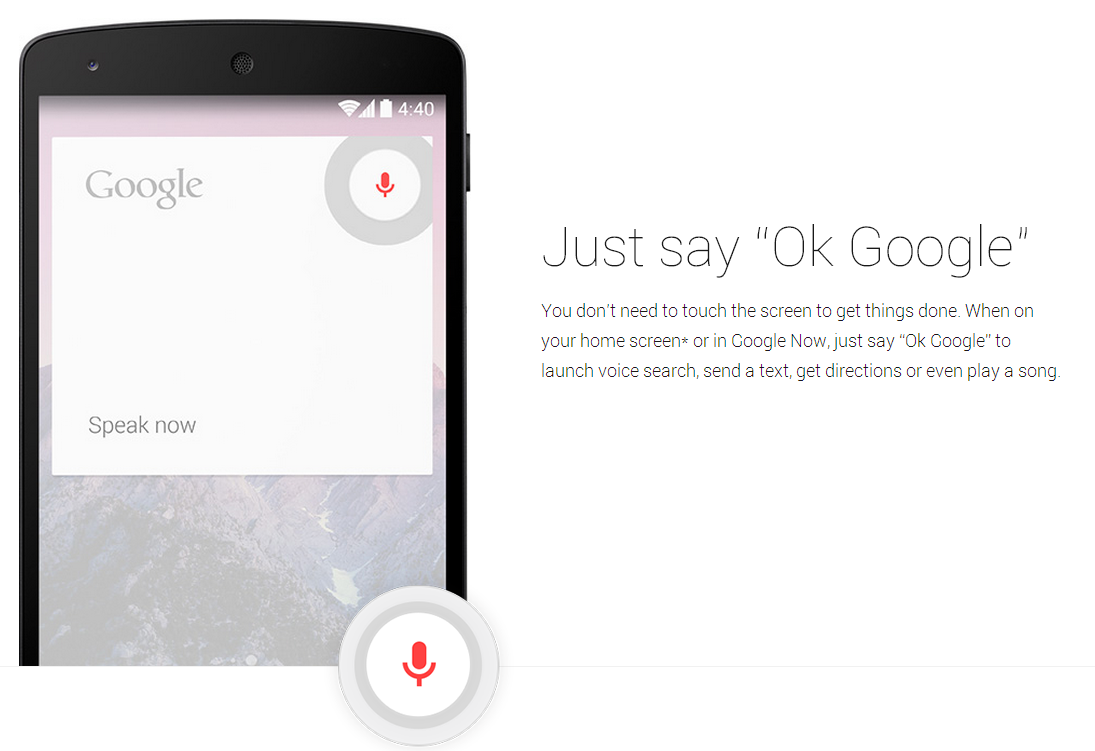

Do you have an iPhone with Siri? Or an Android phone with Google Now?

Then you may be using NLP all the time. You can talk to your phone and it will answer to the best of its ability. Remember that anthropomorphizing computer programs is good? So, do it with Siri or Google Now. What kind of a person do you think you’re dealing with? If my experience is any guide, you’ll think it’s an idiot savant. Someone who knows lots of things but has no common sense and no understanding of real life or, half the time, what you’re asking about.

But this model, of consulting your NLP phone when you need help, might have promise for clinical use in the ED or clinic in the future. “Phone, I’ve got a 17-year-old girl with a possible history of drug abuse, with new-onset psychosis, and a petechial rash on her lower legs. She came in as a Level I trauma, but as it turns out, there wasn’t really any trauma. But her spleen is big on the trauma pan-scan. Any ideas?” “Well, Keith, have you thought about TTP? Neurological manifestations are more common, but psychosis has been reported. And TTP has been reported from injecting the oral narcotic Opana. If the platelets are low when the CBC comes back, you’ve pretty much got the diagnosis clinched.” “Thanks, phone, I was sort of thinking along those lines, but I didn’t remember the association with Opana. And yes, the CBC just came back, and the platelet count is ‘pending,’ which is an almost sure sign it’s going to be low.” Phones aren’t much use at performing a physical exam, but eventually they might be as useful for diagnosing zebras as they are for finding out where the Spike Jonze movie “Her” is playing. But if your phone is anything like my phone, it’s a long way from the operating system in “Her.”

I was going to close this essay with the phrase “stay tuned.” But there is no need to stay tuned. At some point, when you’re having a hard time with a difficult medical case, your phone will start gently offering you suggestions.

Tags: ED Systems, Emergency Department, Suicide, Computers, Emile Durkheim, ED, NLP, Charting, Natural Language Processing, Information Technology, text mining, Healthcare, IT, Healthcare IT, Tutorial