User experience (“UX” to the cognoscenti) is a burgeoning field. Used to be we called this computer usability, user interface design or user interaction design. It was focused mostly on software such as word processors, spreadsheets, industrial control software, airplane cockpits, and medical applications. But, given how much money can be made on the web, UX now focuses quite tightly on web usability, particularly e-commerce websites.

Early efforts at assessing usability were crude. For the most part, they are still crude. Early on, usabilitists (I just made that up) would count the clicks needed to perform a task. Cutting down the number of clicks was a simple way to make at least this particular task faster.

But these days, the Web UX community sees “counting clicks” as unbearably primitive and déclassé. As UX grew out of usability and user interaction design, and focused tightly on web-page design, dogma evolved, including “All pages should be accessible in 3 clicks.” But this dogma was later debunked: “Three clicks is a myth.” When people are browsing the web, more than 3 clicks are fine, as long as you continuously have “the scent of information” – that is, your clicks each result in more, and perhaps more specifically useful, information.

But does this selling-on-the-web principle apply when you’re ordering a complete blood count? Or a chest X-ray? Or trying to view the results? Web UX principles may not apply to medical tasks, or nuclear power control software. But providing the scent of information, or even counting clicks, seems basic to almost any human-computer interaction.

But is a click just a click? Are all clicks created equal? Or, to misquote George Orwell, are some clicks more equal than others? Are some clicks harder, or easier, than others? Should some clicks get a 1.0 rating, and others a 0.7?

Given how many times medical professionals click during a shift, small differences in the speed, difficulty and error rate of clicks can, over the hours and days and months, make a significant difference in productivity. Have you ever had a mouse left-click button switch (it’s always the left one) start going bad, so that sometimes it doesn’t click, and sometimes it clicks twice when you try to click once? This just happened to me last week. It took five times longer to do anything, as I was having to correct numerous click errors. And I had to move to a different computer until we could get a new mouse. Not to mention that some of those click errors could have killed someone if I hadn’t fixed them quickly.

So clicks are indeed a topic worthy of investigation and quantification, with an aim to decreasing the number of clicks, as well as making the clicks better, Web UX dweeb pronouncements notwithstanding.

There is good scientific evidence that the smaller the click-target, and the further you have to move your mouse or finger to get to it, the more likely you will miss it. This is discussed more in the post on Fitts’s Law and linked content. And, as discussed in another post here, selecting among a large number of click-targets also imposes a larger cognitive load, and makes errors more frequent. We also know there’s less cognitive load when a click-target provides good affordance.
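For readers who like to see the arithmetic, here is a minimal Python sketch of the Shannon formulation of Fitts's index of difficulty, log2(D/W + 1), which Fitts's Law relates to how long a pointing movement takes. The function name and the example distances and widths are made up for illustration; they are not measurements from any real system.

```python
import math


def fitts_index_of_difficulty(distance_cm: float, width_cm: float) -> float:
    """Shannon formulation of Fitts's index of difficulty, in bits.

    distance_cm: distance from the starting point to the target.
    width_cm: width of the target.
    """
    if distance_cm < 0 or width_cm <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    return math.log2(distance_cm / width_cm + 1)


# Illustrative (made-up) targets: a small, far-away link and a big, nearby button.
small_far = fitts_index_of_difficulty(distance_cm=20.0, width_cm=0.5)
large_near = fitts_index_of_difficulty(distance_cm=5.0, width_cm=2.0)

print(f"small, far target:  {small_far:.2f} bits")
print(f"large, near target: {large_near:.2f} bits")
```

The small, distant target comes out with the higher index, which matches the intuition that it is the harder one to hit.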

We can provide a mathematical summation of all of these to provide a measure of how easy it is to click a particular target. In other words, we can assess some clicks as being more equal than others. Here, then, is a proposal for counting clicks, not as integers, but as numbers with a decimal point and a degree of precision of two places. Even a degree of precision of two might seem presumptuous, but we do need to start somewhere.

Since a click is usually counted as one (1.0), let’s assume that a click with minimal cognitive and psycho-motor overhead counts as 1.0, and additional difficulty layered on top of this increases this number, to 1.1, 1.2, and the like.

Here is a formula, made up with guesses for the coefficients. Research would be required to validate the concept and assign validated coefficients; perhaps a medical informaticist eager for a research project would take this up.

c = a + b × log2(D / W) + d × n / (W + s)

units: cm/cm

c = scaled click

a = affordance, based on this list (I am hoping to instigate geeky flame wars about the right coefficients):

- Underlined blue text with cursor hinting, also known as a hover state: as your mouse moves across the text, it changes appearance: 1.0

- Underlined blue text without cursor hinting: 1.1

- Underlined non-blue text with cursor hinting: 1.1

- Underlined non-blue text without cursor hinting: 1.2

- Non-underlined text with cursor hinting: 1.2

- Skeuomorphic button with cursor hinting: 1.0

- Skeuomorphic button without cursor hinting: 1.1

- None of the above (“you can just click on anything on the screen”): 1.3

b, d = arbitrary scaling factors, values to be determined later

D = distance from the last click (could be on a prior screen, or the same screen, doesn’t matter) in cm.

W = width of click-target, narrowest dimension, in cm as viewed on the screen. The traditional Shannon formulation of Fitts’s Law uses W that is measured along the axis of movement, so this is a simplification that makes measurement easier.

What matters to fingers and hands and eyes is the physical size on the screen, and these body parts don’t resize as you resize windows on the screen. W is an anatomic width. Yes, touchscreen finger clicks and mouse clicks are different. We could use different scaling factors depending on whether you’re using a touchscreen that requires a large finger-click, or something that is clicked with a smaller and more accurate mouse cursor. However, for an initial attempt, it seems better to use a single formula and simply interpret the results differently for finger-systems and mouse-systems, as some systems allow users to switch at will between finger and mouse.

n = number of click-targets on the screen/page, a measure of cognitive workload, also known as analysis paralysis.

s = spacing of the click-target: how far it is from the nearest other click-target, in cm on the screen. Closer makes a mis-click more likely.
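To make the proposal concrete, here is a rough Python sketch of the scaled click. It rests on several assumptions: it reads the formula as c = a + b × log2(D / W) + d × n / (W + s), so that wider spacing lowers the score; the values b = 0.05 and d = 0.01 are placeholders picked only to keep the example arithmetic tidy; and the dictionary keys and function name are hypothetical labels for the affordance list above.

```python
import math

# Affordance values from the list above, keyed by (kind of target, cursor hinting?).
# The key names are hypothetical labels, not part of the proposal.
AFFORDANCE = {
    ("underlined_blue_text", True): 1.0,
    ("underlined_blue_text", False): 1.1,
    ("underlined_nonblue_text", True): 1.1,
    ("underlined_nonblue_text", False): 1.2,
    ("plain_text", True): 1.2,
    ("skeuomorphic_button", True): 1.0,
    ("skeuomorphic_button", False): 1.1,
}
NONE_OF_THE_ABOVE = 1.3  # "you can just click on anything on the screen"


def scaled_click(kind: str, cursor_hinting: bool, distance_cm: float,
                 width_cm: float, n_targets: int, spacing_cm: float,
                 b: float = 0.05, d: float = 0.01) -> float:
    """Scaled click c, rounded to two decimal places.

    Assumed grouping: c = a + b*log2(D/W) + d*n/(W + s).
    b and d are placeholder scaling factors, not validated coefficients.
    """
    if distance_cm <= 0 or width_cm <= 0 or spacing_cm < 0 or n_targets < 1:
        raise ValueError("need D > 0, W > 0, s >= 0 and at least one target")
    a = AFFORDANCE.get((kind, cursor_hinting), NONE_OF_THE_ABOVE)
    # Note: this term goes negative when the target is wider than the distance moved.
    movement = b * math.log2(distance_cm / width_cm)
    crowding = d * n_targets / (width_cm + spacing_cm)
    return round(a + movement + crowding, 2)


# A 1 cm wide blue underlined link with hover state, 8 cm from the last click,
# on a screen with 10 click-targets, 1 cm from its nearest neighbor.
example = scaled_click("underlined_blue_text", True,
                       distance_cm=8.0, width_cm=1.0,
                       n_targets=10, spacing_cm=1.0)
print(example)  # 1.0 + 0.05*3 + 0.01*10/2 = 1.2
```

With these placeholder coefficients, the same click on plain text with no hover state would score 1.5 instead of 1.2, which is the kind of difference the proposal is meant to surface.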

There are many ways to critique this formula, and I hope people will leave such critiques here as comments. One obvious critique: the number depends on the size of your screen! Yes, but so does click-usability. For comparing systems we could specify a certain size monitor screen.
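One way to pin down the screen size for comparisons is to convert on-screen pixel measurements to centimeters on a stated reference monitor. The sketch below assumes a hypothetical reference display, a 24-inch diagonal at 1920 × 1080 pixels; that choice and the function names are mine, not part of the proposal.

```python
import math

CM_PER_INCH = 2.54


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density of a monitor from its resolution and diagonal size."""
    return math.hypot(width_px, height_px) / diagonal_in


def px_to_cm(pixels: float, ppi: float) -> float:
    """Physical length in cm of a run of pixels at the given pixel density."""
    return pixels / ppi * CM_PER_INCH


# Hypothetical reference monitor: 24-inch diagonal, 1920 x 1080 pixels.
reference_ppi = pixels_per_inch(1920, 1080, 24.0)

# A click-target 32 pixels wide on that monitor (about 0.89 cm).
button_width_cm = px_to_cm(32, reference_ppi)
print(f"{reference_ppi:.1f} ppi, 32 px = {button_width_cm:.2f} cm")
```

Measuring D, W and s this way means two systems can be compared on the same footing, even if the screenshots came from different machines.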

Thank you for your thoughts.

Tags: Computers, Healthcare IT, Usability, User Interaction Design, User Interface, Human Error, Cognitive Friction

A couple of thoughts, a couple of years after this post.

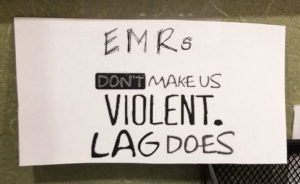

First, the usability of medical software, especially the leading electronic medical record systems, is so bad that we should still be counting clicks.

Second, with these systems, any transition between clicking (or tapping with a finger) and typing should count for several clicks. Not sure how many, maybe 4. But having to switch input modes slows people down and makes them more likely to make an error. And if the performance of the system is so bad that people often have to click something two or three times to get a click to “stick,” then, because of the useless pause waiting for each click to work, those clicks should count for double the number it took to get the click to “stick.”

And if the system is so slow that you can’t tell if your click actually worked – the button doesn’t push in right away – then you keep clicking the button, as quickly as you can, until it actually pushes in; each extra click you can get in counts quadruple. And yes, I have used DocuTAP at MedExpress and for years the performance has been so poor that double- and triple-clicks are common, and I could regularly click that Print button 15 times before it finally pushed in.
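These follow-up rules can be tallied in a few lines of Python. This is only a sketch of one reading of them: a switch between clicking and typing costs 4, a click that needs several tries costs double the number of tries, and every extra click fired off while waiting for a sluggish button costs 4. The class, the field names, and the decision to count typing itself as zero are my assumptions.

```python
from dataclasses import dataclass

MODE_SWITCH_COST = 4.0   # "maybe 4" clicks per click/typing transition
RETRY_MULTIPLIER = 2.0   # clicks that must be repeated count double
SPAM_MULTIPLIER = 4.0    # extra clicks while waiting count quadruple


@dataclass
class Action:
    kind: str                   # "click" or "type"
    attempts: int = 1           # deliberate clicks needed before one "sticks"
    extra_spam_clicks: int = 0  # extra rapid clicks while the button is unresponsive


def effective_clicks(actions: list[Action]) -> float:
    """Total click cost for a sequence of actions under the rules above."""
    total = 0.0
    previous_kind = None
    for action in actions:
        # Charge for switching input mode between consecutive actions.
        if previous_kind is not None and action.kind != previous_kind:
            total += MODE_SWITCH_COST
        if action.kind == "click":
            if action.attempts > 1:
                total += RETRY_MULTIPLIER * action.attempts
            else:
                total += 1.0
            total += SPAM_MULTIPLIER * action.extra_spam_clicks
        previous_kind = action.kind
    return total


# Click a field, type into it, then click a button that takes three tries.
task = [Action("click"), Action("type"), Action("click", attempts=3)]
print(effective_clicks(task))  # 1 + 4 + 4 + 2*3 = 15.0

# One Print click plus 14 extra clicks before the button pushes in.
print(effective_clicks([Action("click", extra_spam_clicks=14)]))  # 1 + 4*14 = 57.0
```

On this reading, a task that looks like two clicks and some typing is charged 15, and a single Print click on a sluggish system is charged 57.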